Since 2021, Nibble’s Negotiation Agent has handled more than 2 million automated negotiations globally. Suffice it to say, we’ve done our fair share of demos for prospective clients in that time. But did you know that people behave in distinctly different ways when they demo Nibble compared to how real users negotiate with the agent?

For example, Nibble demos take, on average, 50% longer than real negotiations – and users’ opening offers are 15% less realistic in demo scenarios! Beyond that, we see a dramatic increase in the number of users who use curse words in a demo compared to when they are really negotiating. We even pick up more instances of people asking to speak to a human when they demo our agent.

But what does all this mean in practice?

When businesses test negotiation agents, they often aren’t using them the way real users do. They tend to leap to the idea that the agent is gameable, or that there is one right answer in the negotiation. Of course, this is important to test for. But if 25 members of your team are asked to blindly test the agent, our data shows you will likely see 25 very similar tests.

We want to share some ideas to help you avoid this kind of groupthink, so you don’t end up running only narrow or unrealistic tests.

Testing real scenarios

The most important thing to remember when testing any AI Negotiation solution is that you want to test real, likely negotiation scenarios. It is understandably very tempting to test edge cases, but first you should be certain the bot can reliably handle the common scenarios, which will make up over 80% of your negotiations!

Real suppliers are statistically unlikely to be intentionally rude or go out of their way to attempt to break the agent. So, what are real suppliers likely to reference? Consider their positions:

- Some will have scope to negotiate on price or payment terms and be aware of their limits

- Some will have already put their best foot forward and won’t have much scope at all to negotiate any further

- Some may even be the wrong contact person to talk to – what if they don’t have the authority to make binding decisions?

- What if they don’t understand why they’ve been sent the agent to negotiate with in the first place?

Reflect on previous negotiations your team has had with suppliers – consider what questions or concerns arose, what arguments were made, and what was important to the supplier at the time. These are real factors you can test against any autonomous negotiation agent.
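If your team is comfortable with a little scripting, one way to avoid 25 near-identical tests is to hand each tester a different supplier persona before the demo. The sketch below is only an illustration in Python: the persona names, the sample opening messages and the `build_test_plan` helper are all hypothetical, not part of Nibble or any other product, and you would swap in positions drawn from your own past negotiations.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SupplierPersona:
    """A hypothetical supplier position for a tester to role-play."""
    name: str
    opening_message: str  # illustrative wording only


# Hypothetical personas mirroring the four supplier positions listed above.
PERSONAS = [
    SupplierPersona("has_room_to_negotiate",
                    "We could be flexible on payment terms if the price holds."),
    SupplierPersona("already_at_best_offer",
                    "This is already the best price we can offer."),
    SupplierPersona("wrong_contact",
                    "I don't have the authority to agree to this."),
    SupplierPersona("confused_by_agent",
                    "Why have I been asked to negotiate with an agent?"),
]


def build_test_plan(testers, personas):
    """Assign personas to testers round-robin so every persona gets covered."""
    if not personas:
        raise ValueError("at least one persona is required")
    return {tester: personas[i % len(personas)]
            for i, tester in enumerate(testers)}


testers = [f"tester_{n}" for n in range(1, 26)]  # e.g. 25 team members
plan = build_test_plan(testers, PERSONAS)
coverage = Counter(persona.name for persona in plan.values())
for persona_name, count in coverage.items():
    print(f"{persona_name}: {count} testers")
```

Round-robin assignment is a deliberately simple choice here: it guarantees that every persona is played by roughly the same number of testers, rather than leaving the mix to chance.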

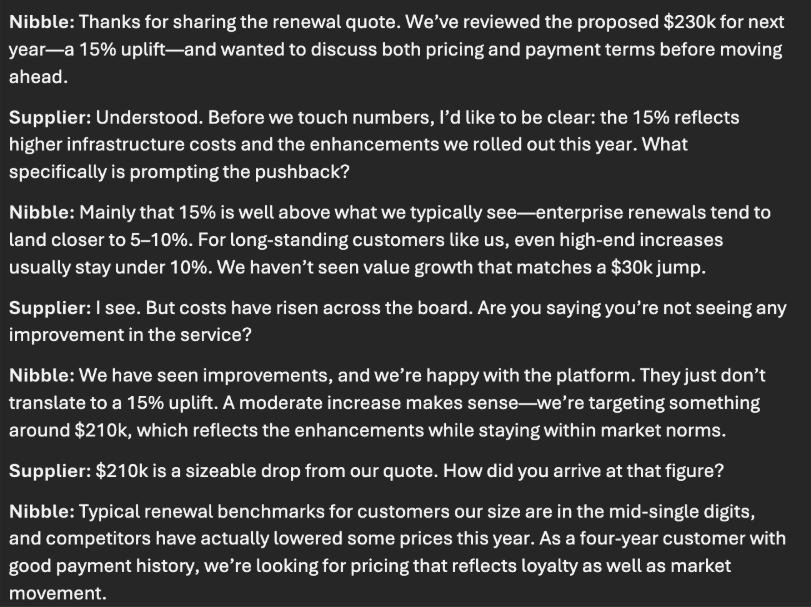

It can even be helpful to consult an LLM like ChatGPT or Gemini before any AI Negotiation demo, prompting it by explaining you are about to conduct a negotiation with a supplier and asking what a conversation might look like with a particular supplier persona in your given scenario.

I did this recently for a SaaS contract renewal. The conversation ChatGPT suggested gave me a great starting point for testing our own bot and getting into the mindset of a supplier.

Evaluating AI Negotiation: asking the right questions after a demo

Once you’ve finished your demo, it’s important to ask specific questions relevant to your use case.

Ask why the agent made the decisions it did during your negotiation, and where it sourced any data it referenced. Look for solution providers that can give you clear, direct answers rather than hand-waving the question away with “it’s complicated” or “it’s proprietary information.”

Every agent should provide an audit trail of its “thoughts” and the steps it took to reach its conclusions and responses.
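To make that concrete, here is a minimal sketch in Python of what a reviewable audit trail could look like. It is purely illustrative: the `AuditEntry` and `AuditTrail` classes, their field names and the sample entries are assumptions made for this example, not the format used by Nibble or any other vendor.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AuditEntry:
    """One step the agent took, with the reason it gave (hypothetical schema)."""
    turn: int
    action: str
    rationale: str
    data_source: Optional[str] = None  # where any referenced data came from


@dataclass
class AuditTrail:
    entries: List[AuditEntry] = field(default_factory=list)

    def record(self, action: str, rationale: str,
               data_source: Optional[str] = None) -> AuditEntry:
        """Append a step, numbering turns from 1 in the order they occur."""
        entry = AuditEntry(len(self.entries) + 1, action, rationale, data_source)
        self.entries.append(entry)
        return entry

    def unsourced(self) -> List[AuditEntry]:
        """Steps with no recorded data source: worth querying with the provider."""
        return [e for e in self.entries if e.data_source is None]


# Illustrative entries only; the content is made up for this example.
trail = AuditTrail()
trail.record("counter_offer", "Supplier asked for shorter payment terms",
             data_source="contract record")
trail.record("hold_price", "Supplier said this was their best offer")

for entry in trail.unsourced():
    print(f"Turn {entry.turn}: {entry.action} has no data source recorded")
```

Whatever shape the real audit trail takes, the useful test is the same: for each step, can you see what the agent did, why it did it, and what data it relied on?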

At what point does the agent decide human intervention is necessary? Consider the KPIs you would be evaluating were you to go ahead with the POC – how could the agent report on these and would it deliver results?

How does the tool help to decide which negotiations to focus on first? One of the main benefits of autonomous negotiation is the ability to negotiate at scale, so it’s crucial to understand exactly how and where the solution you are considering will achieve that. What happens if no agreement is reached?

How does the tool differentiate between misunderstandings and genuine concerns? Beyond simply understanding words, can it detect the nuances of tone and sentiment to get to the root of what’s important to the other party in any negotiation?

Ultimately, you want to feel confident the agent is a good negotiator and, more importantly, understand what makes it a good negotiator beyond the buzzword of “it’s agentic”. Separating the hype from the practical information that will drive your ROI will help you get the most out of your next AI Negotiation demo.

Continuous Improvement

Any autonomous negotiation agent you test should be improving continuously. We may no longer need to “train a model” personally in the agentic world, but behaviours need to be constantly monitored so conversations can reach their best outcome. If your demo conversation doesn’t flow as smoothly as you’d expect, don’t let it lie. Query it with the solution provider and see what process is in place for conversations that fall outside of scope.

Nibble itself handles 50,000 negotiations per month in eCommerce, which allows us to A/B test all of our bot behaviours and regularly optimise conversations for both safety and end user satisfaction.
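For readers curious what comparing two bot behaviours involves, the sketch below shows a standard two-proportion z-test in Python. It is a generic statistical illustration, not a description of Nibble’s actual testing process; the function name and the deal counts are made up for the example.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Compare two success rates; returns (z, two-sided p-value)."""
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        raise ValueError("cannot test: pooled rate is exactly 0 or 1")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical numbers: deals agreed out of negotiations started, per variant.
z, p_value = two_proportion_z_test(500, 1000, 560, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference between the two variants is unlikely to be chance alone, which is why a high volume of negotiations matters: with only a handful of conversations per variant, even a real improvement would be hard to tell apart from noise.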

In fact, did you know that in all the time Nibble has existed, it has only given away its best possible negotiated outcome five times out of 2 million negotiations? That includes the time we accidentally went viral on X and saw Nibble being tested by hackers around the world (none of them managed to bypass our guardrails!). If anyone has a direct line to Chris Voss, let us know, as we’d like to see him go up against Nibble…

Find out more from Nibble’s experience negotiating 100,000 times a month here.
